It's been a while since I wrote my last docker related post, and even longer since I last discussed docker on AWS, and a lot has changed since then. For production deployment there are now the Amazon EC2 Container Service (ECS) and Google Container Engine (GKE), both of which can help you deploy docker-based microservices with ease.

If, however, you need to comply with additional auditable security requirements, the situation is murkier, and the services that ease development might not be acceptable to your auditors. I am going to outline my setup for a HIPAA compliant docker architecture on AWS. In some ways it is a bit of a throwback, but in other ways it allows you to embrace recent developments such as Docker Machine and Docker Compose. When it comes to HIPAA my main concerns are:

- Encryption of all data, including PHI, in motion.

- Encryption of all data, including PHI, at rest.

- Solid isolation of decrypted data from other programs running in AWS's cloud.

Fundamentally it is important to remember that on AWS you are always using a shared network, and unless you explicitly specify otherwise you are using shared servers. Therefore it is important, both within and outside your VPC, to treat all PHI as though it were being transferred and stored on a publicly accessible network.

Encryption in Motion

When running on AWS, I use a Virtual Private Cloud (VPC) to create a logical isolation of my internal network. I create public and private zones, and I keep PHI in the private zones. My private EC2 instances can create connections to the outside world via a NAT instance in the public zone of my network, and I can connect to my private EC2 instances using a Bastion server in my public zone. A good VPC depends on many parameters, including access control lists, security policies, and default tenancy requirements. To avoid mistakes I use a CloudFormation definition of my VPC and update it as necessary. I could write an entire post about this process, but it is not the topic of this section.

The main thing to understand about VPC is that it creates a logical isolation of your resources. VPC does not encrypt your traffic as it is sent on the physical wires that connect real hosts. For this reason I encrypt traffic that is internal to my VPC as well as traffic which goes to and from the public internet. Traffic moving between docker containers on the same EC2 instance is allowed to be unencrypted.

I encrypt my network traffic using two mechanisms:

- HTTP over TLS

- SSH Tunneling

HTTP over TLS

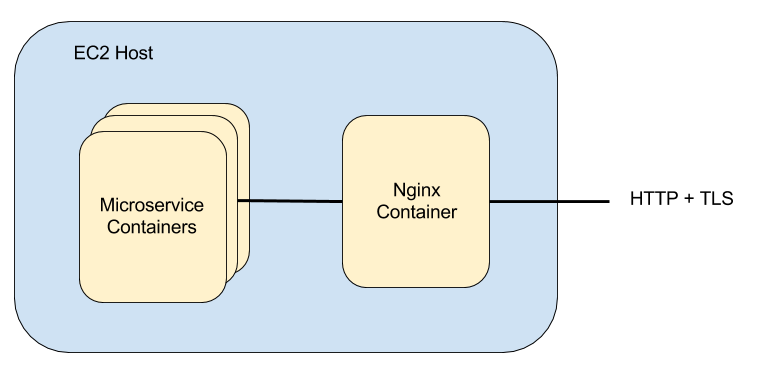

I primarily make use of a microservice based architecture with RESTful HTTP APIs. It is straightforward to add a reverse-proxy Nginx container in front of each microservice to manage the TLS layer, which is what I typically do, as shown in the architecture diagram above.

In order to facilitate TLS connections, I create a certificate authority as well as a certificate revocation list and bundle the root certificate and CRL in the operating system-level base container image on which I build my microservice containers. I then provide each Nginx container with a separate certificate signed by my certificate authority. Should one of the certificate private keys escape into the wild, I can revoke the certificate, create a new certificate to replace the old one, and rebuild my containers.

I also create client certificates, signed by my certificate authority, to make sure that only authorized clients are connecting to the microservices I have deployed. Nginx checks for the presence of a client certificate, and passes on information about the certificate, if found and verified, to the microservice itself. The microservice can choose to respond if a certificate is verified, or if a particular certificate fingerprint is present. The particular configuration lines I use in Nginx to facilitate this are:

    # Trust client certificates signed by my CA and reject revoked ones.
    ssl_client_certificate /etc/nginx/certs/root-ca.crt;
    ssl_crl /etc/nginx/certs/ca.crl;
    # Request a client certificate, but let the microservice decide what to do
    # when none is presented.
    ssl_verify_client optional;

    location / {
        proxy_pass http://service:8080/;
        proxy_redirect off;
        proxy_http_version 1.1;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        # Pass the result of client certificate verification to the microservice.
        proxy_set_header X-Client-Verified $ssl_client_verify;
        proxy_set_header X-Client-DN $ssl_client_s_dn;
        proxy_set_header X-Client-Serial $ssl_client_serial;
        proxy_set_header X-Client-Fingerprint $ssl_client_fingerprint;
    }
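Because ssl_verify_client is set to optional, Nginx forwards requests even when no certificate was presented, so the decision to respond rests with the microservice. What follows is a minimal sketch of that check, not the code I run in production. The function name client_is_authorized and the allowed_fingerprints allow-list are hypothetical, and it assumes the headers arrive in a mapping keyed exactly as Nginx sets them (most web frameworks offer a case-insensitive lookup instead).

```python
from typing import Mapping, Optional, Set


def client_is_authorized(
    headers: Mapping[str, str],
    allowed_fingerprints: Optional[Set[str]] = None,
) -> bool:
    """Decide whether a proxied request carries an acceptable client certificate.

    `headers` holds the request headers as forwarded by Nginx.
    `allowed_fingerprints` is a hypothetical allow-list; when it is None,
    any certificate that Nginx verified is accepted.
    """
    # Nginx sets $ssl_client_verify to "SUCCESS" only when verification
    # passed. "NONE" means no certificate was sent, and "FAILED:<reason>"
    # means verification failed.
    if headers.get("X-Client-Verified") != "SUCCESS":
        return False
    if allowed_fingerprints is None:
        return True
    # $ssl_client_fingerprint is the certificate's SHA-1 fingerprint;
    # compare case-insensitively so the allow-list format does not matter.
    fingerprint = headers.get("X-Client-Fingerprint", "").lower()
    return fingerprint in {f.lower() for f in allowed_fingerprints}
```

A check like this is only meaningful when the microservice is reachable solely through the Nginx container, since proxy_set_header overwrites any value a client supplies for these headers but a direct connection would bypass that.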

SSH Tunneling

Most of the microservices I create can be hosted on a single EC2 instance; however, there are times when I wish to separate resources that are part of one service onto multiple EC2 instances. A common example is when I wish to use multiple database instances located on redundant EC2 instances in separate availability zones.

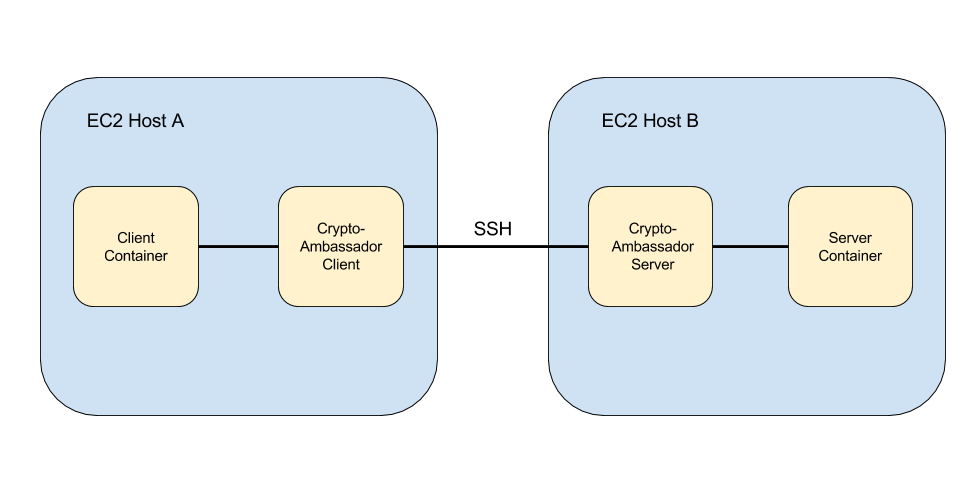

In these cases I use a variation on the ambassador pattern which embeds the links between EC2 instances in SSH tunnels managed by autossh. Each EC2 instance is already running an OpenSSH server, so it may seem natural to use those existing servers to manage the connections between EC2 instances. However, I have chosen to roll the SSH server into the ambassador containers in order to provide an additional layer of separation between the infrastructure layer and the application layer. The ambassador containers use their own SSH keys and certificates, separate from those on the EC2 instances themselves. This somewhat limits the potential damage should an SSH key move beyond my control.

I call these ambassador containers crypto-ambassadors. Their creation is coordinated by a master EC2 node in the network and a redis store on that node is used to coordinate the relationship between Docker endpoints and EC2 instance ports and addresses. From the point of view of the client and server containers on the separate EC2 instances, the links appear as links on the local docker bridge and they do not need to concern themselves with any cryptography.
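To give a feel for what the client-side ambassador runs, here is a rough sketch that assembles an autossh command line for a forwarding tunnel. It is not the crypto-ambassador implementation itself: the function name, every parameter, and the keepalive values are assumptions chosen for illustration, and the coordination through redis is left out entirely.

```python
from typing import List


def autossh_tunnel_argv(
    local_port: int,
    remote_host: str,
    remote_port: int,
    ssh_host: str,
    ssh_port: int,
    ssh_user: str,
    identity_file: str,
) -> List[str]:
    """Build the argv for an autossh-managed local port forward.

    All parameter names are hypothetical. `ssh_host` and `ssh_port` point at
    the SSH server inside the remote ambassador container, not at the EC2
    instance's own sshd.
    """
    return [
        "autossh",
        "-M", "0",  # turn off autossh's monitor port; rely on SSH keepalives
        "-N",       # forward ports only, run no remote command
        "-o", "ServerAliveInterval=30",    # assumed keepalive interval
        "-o", "ServerAliveCountMax=3",     # assumed tolerance before reconnect
        "-o", "ExitOnForwardFailure=yes",  # fail fast if the forward cannot bind
        "-i", identity_file,
        "-p", str(ssh_port),
        # Listen on all interfaces so linked containers on the local docker
        # bridge can reach the tunnel entrance.
        "-L", f"0.0.0.0:{local_port}:{remote_host}:{remote_port}",
        f"{ssh_user}@{ssh_host}",
    ]
```

The resulting list can be handed to subprocess.run or used as the container's command. Only the ambassador container holds the SSH key; the client container simply connects to the forwarded port.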

Encryption at Rest

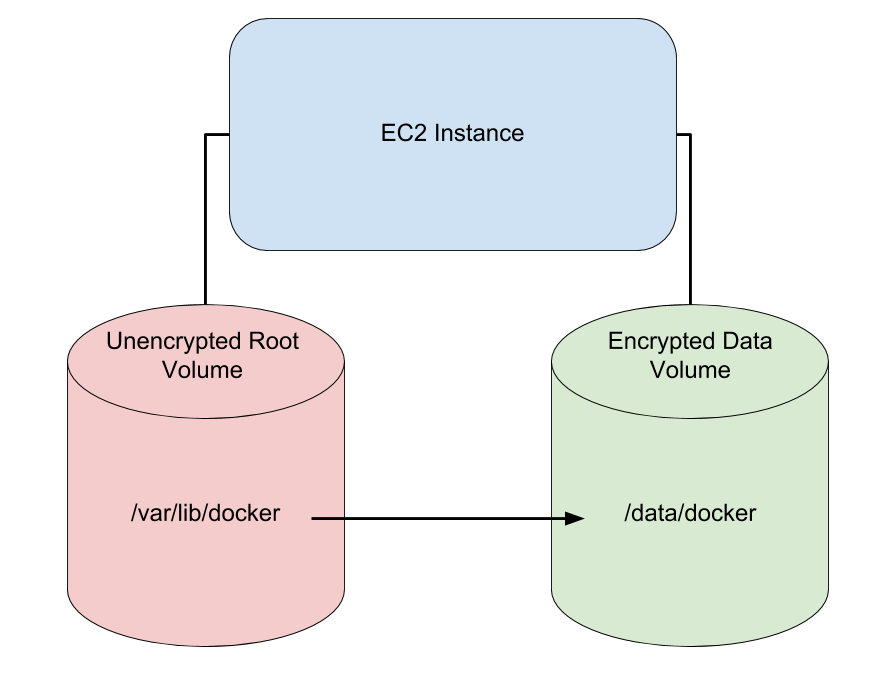

In order to encrypt the data at rest I use encryption at the application layer as well as at the infrastructure layer. The application layer encryption varies depending on the application, but the infrastructure layer encryption is handled using Amazon's encrypted Elastic Block Store (EBS) volumes. One important thing to note is that the root volume of an EC2 instance is not encrypted by default, so I always mount at least one additional EBS data volume with encryption enabled, as in the figure above.

Since my data is in containers, it is important that the docker volumes end up on the encrypted data EBS volume rather than the unencrypted root EBS volume. I facilitate this by creating the EBS volume in my CloudFormation script and creating a symbolic link from /var/lib/docker to /data/docker in the User-Data section of the CloudFormation script. Although conceptually simple, the devil is in the details, as it so often is with CloudFormation, so I have provided a sample template.

- You can download the sample CloudFormation template: DockerMachineSetup.cfn.json

- You can launch the stack yourself:

The template will allow you to create a single EC2 instance with an unencrypted root volume and an encrypted data volume containing a docker directory. It will have access to an S3 bucket you specify, which I typically use to store backups. You need to provide the VPC and subnet ids in which to place the EC2 instance, the name of the S3 bucket you wish the instance to access, and the SSH key you wish the instance to use. These must be pre-created. The launch link above launches the template in the us-west-2 region, but the template is written to support all the AWS regions.

I switch the SSH port from port 22 to port 2222 to avoid spurious SSH traffic if you leave the SSH port accessible from 0.0.0.0/0. However, you can restrict the IP addresses allowed to access your server's SSH and docker ports by specifying a more restrictive AdminCidrIp.
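The step that points /var/lib/docker at the encrypted volume is small enough to sketch on its own. The version below is an illustration in Python rather than the User-Data from the sample template. It assumes the encrypted volume is already formatted and mounted at /data, and that docker has not yet written anything to /var/lib/docker. The function name relocate_docker_dir is hypothetical.

```python
import os
from pathlib import Path


def relocate_docker_dir(docker_dir: Path, data_dir: Path) -> None:
    """Make `docker_dir` a symbolic link to `data_dir` on the encrypted volume.

    Safe to run more than once: an existing, correct link is left alone.
    """
    data_dir.mkdir(parents=True, exist_ok=True)
    if docker_dir.is_symlink():
        if os.path.realpath(docker_dir) == os.path.realpath(data_dir):
            return  # already linked to the right place
        raise RuntimeError(f"{docker_dir} links somewhere other than {data_dir}")
    if docker_dir.exists():
        # Refuse to guess: existing docker state would have to be moved first.
        raise RuntimeError(f"{docker_dir} already exists; move its contents first")
    docker_dir.parent.mkdir(parents=True, exist_ok=True)
    docker_dir.symlink_to(data_dir, target_is_directory=True)
```

On the instance this would be called as relocate_docker_dir(Path("/var/lib/docker"), Path("/data/docker")) with root privileges, before docker is installed, so that everything docker later writes lands on the encrypted volume.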

To complete installation of docker you must install Docker Machine on the host you will use to manage this new EC2 server. I run Docker Machine on the management node in my network's private subnet; however, when experimenting with the template above you can use your laptop. From your management system, you install docker on the new EC2 instance using the following command:

    docker-machine create -d generic \
      --generic-ip-address IP_ADDRESS \
      --generic-ssh-key SSH_KEY \
      --generic-ssh-port 2222 \
      --generic-ssh-user ubuntu \
      MACHINE_NAME

where IP_ADDRESS is the IP address of the new EC2 instance, SSH_KEY is the file containing the SSH key you selected, and MACHINE_NAME is the name you choose for the new instance. Note that this takes a long time to run, and the only feedback you get is a message stating, "Importing SSH key...".

Once installation is complete, I recommend you SSH into the machine and reboot. You can connect using docker-machine with the following command.

    docker-machine ssh MACHINE_NAME

Before you delete the EC2 instance, you must stop the docker process by SSHing in and running the command:

    sudo stop docker

One issue I have encountered is that the docker init script does not wait for remote filesystems to be mounted before starting. I recommend editing /etc/init/docker.conf so that the "start on" line reads

    start on (remote-filesystems and net-device-up IFACE!=lo)

which is a bit redundant.
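If you would rather script that edit than make it by hand, something along these lines should work. It is only a sketch: the function name patch_start_on is hypothetical, and it assumes the existing "start on" stanza fits on a single line.

```python
import re

# The replacement stanza recommended above.
START_ON = "start on (remote-filesystems and net-device-up IFACE!=lo)"


def patch_start_on(conf_text: str, start_on: str = START_ON) -> str:
    """Return `conf_text` with its first single-line `start on` stanza replaced."""
    patched, count = re.subn(
        r"^start on .*$",
        lambda _match: start_on,  # a callable avoids backslash interpretation
        conf_text,
        count=1,
        flags=re.MULTILINE,
    )
    if count == 0:
        raise ValueError("no 'start on' line found")
    return patched
```

To apply it, read /etc/init/docker.conf, pass the text through patch_start_on, and write the result back as root before the next reboot.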

Isolation of Decrypted Data

At some point the data must be decrypted. This occurs within the containers running on the EC2 instance. Docker's containerization helps to isolate the decrypted data from other process groups on the instance; however, in order to be as safe as possible I use dedicated tenancy instances. This assures me that only my instances are running on the underlying physical hardware. Exploits are occasionally reported which suggest that data can leak from one virtual machine to another on the same physical hardware.

Conclusion

The cloud is an amazing resource which allows us to access a tremendous amount of processing power at minimal expense; however, we must remain aware that in using the cloud we are using a shared space. It was much easier to be certain that a connection was secure when we could trace the physical wire. It was easier to see that our network was isolated when we could disconnect the firewall from the internet. In the cloud even our isolated networks are shared, and we should build with that in mind.